The robots.txt file is useful for blocking search engine crawlers from some of the pages on your blog or site. In this article, we will look at some commonly asked questions about the robots.txt file, with a particular focus on Blogger blogs.

The questions follow a logical order, so you can also read them from top to bottom.

What is a Robot?

A robot (also known as a wanderer, crawler or spider) is a computer program that traverses the web automatically.

Even though these names give you the feeling that these programs “travel around” the Internet, they don’t really travel from computer to computer. What they actually do is follow the hyperlinks found on web pages, issuing a download request for each of those linked pages.
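As a rough illustration of that link-following step only (not of how any particular search engine’s robot is built), the Python sketch below uses the standard library’s html.parser to collect the absolute URLs of the anchor (a href) links on one page. A robot would then issue a download request for each of them. The page snippet, post URL and the LinkCollector/extract_links names are made up for this example.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Hypothetical helper: collects absolute URLs of all <a href="..."> links."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        # html.parser reports tag and attribute names in lowercase.
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    # Resolve relative links against the page's own URL.
                    self.links.append(urljoin(self.base_url, value))


def extract_links(html, base_url):
    collector = LinkCollector(base_url)
    collector.feed(html)
    collector.close()
    return collector.links


# Hypothetical page content from the example blog.
page = '<p><a href="/2010/05/my-post.html">Post</a> <a href="http://example.com/">Other</a></p>'
print(extract_links(page, "http://my-great-blog.blogspot.com/"))
# ['http://my-great-blog.blogspot.com/2010/05/my-post.html', 'http://example.com/']
```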

Crawling, however, is a separate topic that falls beyond the scope of this article.

What is the robots.txt file?

This is a simple ASCII text file, and its name must be written in all lowercase letters as robots.txt. It should be located in the root directory of your domain. On a typical website, this is where you keep your index.html file.
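Because the file always sits at the root, its address can be worked out from any page URL on the same host. Here is a small Python sketch using urllib.parse; the robots_txt_url name and the post path in the example are hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url):
    """Return the robots.txt URL for the host that serves page_url.

    Keeps only the scheme and host, and replaces the path (plus any query
    string or fragment) with /robots.txt, since the file lives at the root.
    """
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("http://my-great-blog.blogspot.com/2010/05/my-post.html?m=1"))
# http://my-great-blog.blogspot.com/robots.txt
```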

In Blogger blogs, the file is located at the following address:

http://<your-blog-name>.blogspot.com/robots.txt

For example, if your blog name is my-great-blog, you can view your robots.txt file by typing the following address into your browser’s address bar.

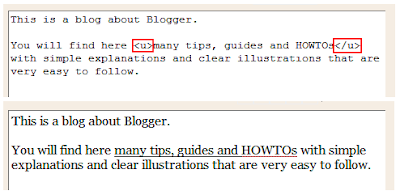

http://my-great-blog.blogspot.com/robots.txt

What is the format of this file?

A typical robots.txt file consists of one or more sets of rules, or directions, for search engine robots. Each set of rules consists of two or more instructions written on consecutive lines, and rule sets are separated by blank lines.

Here’s a typical example file from a Blogspot blog. (Line numbers are added for reference only; the actual file does not contain them.)

1: User-agent: Mediapartners-Google

2: Disallow:

3:

4: User-agent: *

5: Disallow: /search

Lines 1 & 2 form one set of rules and lines 4 & 5 form another set. They are separated by the blank line 3.
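To make the rule-set structure concrete, here is a simplified Python sketch that splits a robots.txt file into rule sets at blank lines, as described above. The split_rule_sets function is hypothetical and only illustrates this article’s description; real parsers (such as the one in Python’s urllib.robotparser) also start a new group whenever a User-agent: line follows a rule, and they handle many more edge cases.

```python
def split_rule_sets(robots_txt):
    """Hypothetical helper: split robots.txt text into rule sets at blank lines.

    Each rule set is a list of (field, value) pairs such as ("user-agent", "*").
    Field names are lower-cased because they are case-insensitive, and
    anything after '#' is treated as a comment and dropped.
    """
    rule_sets, current = [], []
    for raw_line in robots_txt.splitlines():
        if not raw_line.strip():
            # A truly blank line ends the current rule set (if any).
            if current:
                rule_sets.append(current)
                current = []
            continue
        line = raw_line.split("#", 1)[0].strip()
        field, sep, value = line.partition(":")
        if sep:  # skip comment-only lines and lines without a colon
            current.append((field.strip().lower(), value.strip()))
    if current:
        rule_sets.append(current)
    return rule_sets


# The example file above, without the reference line numbers.
example = """User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow: /search
"""
for rule_set in split_rule_sets(example):
    print(rule_set)
# [('user-agent', 'Mediapartners-Google'), ('disallow', '')]
# [('user-agent', '*'), ('disallow', '/search')]
```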

A typical rule set starts with a User-agent: line, which identifies one or more robots. It then has one or more Disallow: (or Allow:) commands on separate, consecutive lines.

For example, in the second rule set above:

- User-agent: * - means all user agents

- Disallow: /search - means “don’t crawl any URL on your blog whose path starts with /search”. In Blogger, this rule blocks off all Label pages (as well as search result pages). It’s added by default because Label pages just list the posts belonging to that label, which robots will find anyway while crawling the rest of the blog.
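You can check how these two rule sets behave with Python’s standard urllib.robotparser module, which parses robots.txt lines and answers whether a given user agent may fetch a URL. This is a sketch: the label and post URLs are hypothetical, and urllib.robotparser uses simple prefix matching, so it may not reproduce every detail of how Google’s own robots interpret a file.

```python
from urllib.robotparser import RobotFileParser

# The example Blogger robots.txt from above, without the reference line numbers.
ROBOTS_TXT = """User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow: /search
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

blog = "http://my-great-blog.blogspot.com"
label_page = blog + "/search/label/python"  # hypothetical Label page
post_page = blog + "/2010/05/my-post.html"  # hypothetical post

print(parser.can_fetch("Googlebot", label_page))             # False: /search is disallowed for *
print(parser.can_fetch("Googlebot", post_page))              # True: no rule matches this path
print(parser.can_fetch("Mediapartners-Google", label_page))  # True: an empty Disallow: allows everything
```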


What is the use of the robots.txt file?

As you’ve probably realized by now, the robots.txt file is used to prevent robots from crawling certain areas of your site or blog.

However, remember that not all robots respect this file. For example, spam bots, which scan the web to harvest email addresses, can ignore the Disallow: commands and visit those pages anyway. The robots.txt file is therefore not a good way to hide sensitive information; since anyone can read it, listing a private path in it can even draw attention to that path.
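The point that robots.txt is only advisory can be shown in a few lines: the check happens inside the robot’s own code, not on the server. In this hypothetical Python sketch, the /private/ path and both robot functions are invented for illustration; the polite robot consults urllib.robotparser, while the rude one simply skips the check.

```python
from urllib.robotparser import RobotFileParser

parser = RobotFileParser()
parser.parse(["User-agent: *", "Disallow: /private/"])  # hypothetical rule

url = "http://my-great-blog.blogspot.com/private/notes.html"  # hypothetical URL


def polite_robot_may_fetch(url):
    # A well-behaved robot asks the parsed robots.txt before downloading.
    return parser.can_fetch("MyPoliteBot", url)


def rude_robot_may_fetch(url):
    # A spam bot never performs the check; robots.txt itself cannot stop
    # it from requesting the page.
    return True


print(polite_robot_may_fetch(url))  # False
print(rude_robot_may_fetch(url))    # True
```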

Can I edit the robots.txt file?

Unfortunately, Blogger users cannot edit their robots.txt file. It is maintained by Blogger itself, and you cannot upload your own file to replace the default one.

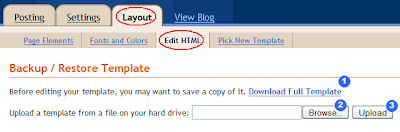

But if you manage a site where you can upload files to the root directory, you can use the tools in the Google Webmaster Tools (GWT) console to create and test your robots.txt file. Once you have verified your site with GWT, these tools are available from the Tools section of the left-hand navigation bar.

The Analyze robots.txt tool lets you test your rules to see which URLs they actually allow or disallow. This helps you avoid unintentionally blocking pages because of syntax errors in your file.
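If you want a quick offline check in the same spirit (this is not the GWT tool itself), a short Python sketch with urllib.robotparser can compare rule variants against URLs you care about. Here a misspelled directive is silently ignored, leaving /search open, while a path accidentally cut short to / blocks the whole blog. The check helper and the URLs are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def check(robots_lines, user_agent, urls):
    """Hypothetical helper: map each URL to whether user_agent may fetch it."""
    parser = RobotFileParser()
    parser.parse(robots_lines)
    return {url: parser.can_fetch(user_agent, url) for url in urls}


blog = "http://my-great-blog.blogspot.com"
urls = [blog + "/search/label/python", blog + "/2010/05/my-post.html"]

intended = ["User-agent: *", "Disallow: /search"]
typo = ["User-agent: *", "Disalow: /search"]  # misspelled directive is ignored
cut_short = ["User-agent: *", "Disallow: /"]  # path cut short: blocks everything

print(check(intended, "Googlebot", urls))   # Label page blocked, post allowed
print(check(typo, "Googlebot", urls))       # both allowed
print(check(cut_short, "Googlebot", urls))  # both blocked
```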

The Generate robots.txt tool has a simple user interface for creating the file, even if you are not sure of its syntax. Once the file is generated, you can download it to your machine and then upload it to your site’s root directory.
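A generator essentially writes User-agent: lines followed by their Allow:/Disallow: lines, with a blank line between rule sets. This Python sketch rebuilds the Blogger example file from a simple list; the generate_robots_txt name and its input shape are assumptions, not part of any Google tool.

```python
def generate_robots_txt(rule_sets):
    """Hypothetical helper: build robots.txt text from (user_agents, rules) pairs.

    user_agents is a list of robot names ("*" means all robots) and rules is a
    list of (directive, path) pairs, where directive is "Allow" or "Disallow".
    Rule sets are separated by a blank line.
    """
    blocks = []
    for user_agents, rules in rule_sets:
        lines = [f"User-agent: {agent}" for agent in user_agents]
        # rstrip() turns "Disallow: " (empty path) into "Disallow:".
        lines += [f"{directive}: {path}".rstrip() for directive, path in rules]
        blocks.append("\n".join(lines))
    return "\n\n".join(blocks) + "\n"


text = generate_robots_txt([
    (["Mediapartners-Google"], [("Disallow", "")]),
    (["*"], [("Disallow", "/search")]),
])
print(text, end="")
```

You would still save the resulting text as robots.txt and upload it to your site’s root directory.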